Managing financial data integrity in the age of AI involves a complex interplay of opportunities and risks. The rapid growth in data volume and velocity, coupled with increasing reliance on AI-driven systems, calls for a critical examination of the vulnerabilities inherent in managing financial information. The challenges range from algorithmic bias and data security breaches to the integration of legacy systems and an evolving regulatory landscape. The goal is to chart a path toward accuracy, reliability, and trust in a rapidly changing technological environment.

This analysis will examine the key challenges in detail, providing insights into the potential pitfalls and outlining strategies for mitigating the risks associated with AI in financial data management. We will explore practical solutions and best practices to address these challenges, fostering a more robust and secure financial ecosystem.

Data Volume and Velocity

The financial industry is awash in data. Transaction records, market data, customer information, and regulatory filings all contribute to an exponentially growing volume of information. This rapid expansion, coupled with the increasing speed at which data is generated and processed (high velocity), presents significant challenges to maintaining data integrity. The sheer scale of this data deluge outpaces traditional data management capabilities, increasing the risk of errors, inconsistencies, and ultimately, compromised financial reporting and decision-making.

The challenges of processing high-velocity data streams in real-time while simultaneously ensuring accuracy are immense. Real-time trading, for instance, demands immediate processing of vast amounts of market data to execute trades effectively. Any latency or inaccuracy in this process can lead to significant financial losses. Similarly, fraud detection systems rely on analyzing massive datasets in real-time to identify suspicious activities. The speed and accuracy of these analyses are critical for mitigating financial risks. The implications extend beyond immediate transactions; the accumulation of inaccurate or incomplete data over time can distort long-term analyses, impacting strategic planning and regulatory compliance.

Big Data’s Impact on Traditional Data Management Systems

The scale and velocity of Big Data can overwhelm traditional relational database management systems (RDBMS) designed for structured data. These systems often struggle with the volume, variety, and velocity of financial data, leading to performance bottlenecks and difficulties in maintaining data consistency and accuracy. They also tend to lack the scalability and flexibility to handle the unstructured and semi-structured data increasingly prevalent in finance, such as social media posts used for sentiment analysis or text from news articles. This mismatch complicates data integration and leads to inconsistencies and inaccuracies across data sources. The processing power required to handle these datasets often exceeds the capabilities of traditional hardware infrastructure, necessitating investment in more powerful and expensive solutions.

Comparison of Traditional and AI-Driven Approaches

The following table compares traditional and AI-driven approaches to managing large financial datasets:

| Feature | Traditional Approach (e.g., RDBMS) | AI-Driven Approach (e.g., Distributed Databases, Machine Learning) |
|---|---|---|
| Data Volume Handling | Limited scalability; performance degrades with large datasets. | Highly scalable; can handle massive datasets distributed across multiple nodes. |
| Data Velocity Handling | Struggles with real-time processing of high-velocity data streams. | Enables real-time processing and analysis of high-velocity data streams. |
| Data Integrity | Relies on manual processes and checks, prone to human error. | Utilizes automated anomaly detection and data quality checks, reducing human error. |
| Data Variety | Primarily handles structured data; struggles with unstructured and semi-structured data. | Can handle various data types, including structured, semi-structured, and unstructured data. |
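To make "automated anomaly detection" concrete, here is a minimal sketch of one common statistical check: the modified z-score based on the median absolute deviation (MAD). The function name `flag_outliers`, the 3.5 threshold, and the sample amounts are illustrative assumptions, not part of any particular product. A production system would likely combine several such rules with learned models.

```python
from statistics import median

def flag_outliers(amounts, threshold=3.5):
    """Return the indices of amounts whose modified z-score exceeds `threshold`.

    The modified z-score uses the median and the median absolute deviation
    (MAD), so a single extreme value does not distort the baseline the way
    it would with a mean and standard deviation.
    """
    values = [float(a) for a in amounts]
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        # More than half the values are identical, so the score cannot be
        # scaled. A different rule is needed; this sketch flags nothing.
        return []
    return [
        i for i, v in enumerate(values)
        if abs(0.6745 * (v - med) / mad) > threshold
    ]

# Hypothetical transaction amounts with one obvious anomaly.
transactions = [100.0, 102.5, 98.0, 101.0, 99.5, 5000.0]
suspicious = flag_outliers(transactions)
```

In this sketch, flagged records would be routed to a human reviewer rather than corrected automatically, which keeps the check consistent with the oversight practices discussed later.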

AI-Driven Data Bias and Errors

The integration of artificial intelligence (AI) into financial data processing offers significant advantages in terms of speed and efficiency. However, the inherent risks associated with AI-driven bias and errors cannot be overlooked. These biases can significantly impact the accuracy and reliability of financial reporting and decision-making, leading to potentially severe consequences. Understanding the sources of these biases and developing effective mitigation strategies is crucial for maintaining data integrity in the financial sector.

AI algorithms used in financial data processing are trained on historical data. If this data reflects existing societal biases, the AI model is likely to inherit those biases and can amplify them. The propagation of errors through financial systems, exacerbated by the scale and speed of AI processing, can lead to widespread inaccuracies and flawed conclusions.

Sources of Bias in AI Algorithms for Financial Data

The potential for bias in AI algorithms used for financial data processing stems from several key sources. Firstly, biased training data is a major concern. If the data used to train an AI model disproportionately represents certain demographics or omits crucial information, the model will learn and perpetuate those biases. Secondly, the design of the algorithm itself can introduce bias. For example, a poorly designed algorithm might inadvertently prioritize certain features over others, leading to skewed outcomes. Finally, the interpretation of AI-generated insights by human analysts can also introduce bias, either consciously or unconsciously. Analysts may selectively focus on data points that confirm their pre-existing beliefs, ignoring contradictory evidence.

Error Propagation in Financial Data Systems

Errors introduced by AI models can quickly propagate throughout financial data systems. For instance, a flawed algorithm used in credit scoring might incorrectly flag certain individuals as high-risk borrowers, leading to unfair denials of credit. This initial error can then cascade through the system, affecting loan applications, risk assessments, and ultimately, financial reporting. The high-speed, automated nature of AI processing can accelerate this propagation, making errors difficult to contain. The lack of transparency in some AI algorithms further complicates the identification and correction of these errors.

Challenges in Detecting and Correcting AI-Induced Biases and Errors

Detecting and correcting AI-induced biases and errors presents significant challenges. The complexity of many AI algorithms often makes it difficult to understand how they arrive at their conclusions, making it challenging to pinpoint the source of errors. Furthermore, the sheer volume of data processed by AI systems makes manual review impractical. The lack of standardized methods for auditing AI algorithms adds another layer of difficulty. Establishing robust validation and verification processes is essential, but this requires specialized expertise and significant investment.
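Although full audits of complex models are difficult, some outcome-level checks are simple to automate. The sketch below computes approval rates per group and the ratio between the lowest and highest rate, often called the disparate impact ratio. The group labels, the sample decisions, and the 0.8 review threshold (a rule of thumb borrowed from the "four-fifths rule" in employment-selection guidance) are assumptions for illustration only. A low ratio is a signal for further investigation, not proof of bias.

```python
from collections import defaultdict

def approval_rates(decisions):
    """Map each group to its approval rate.

    `decisions` is an iterable of (group, approved) pairs.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}

def disparate_impact_ratio(decisions):
    """Ratio of the lowest group approval rate to the highest."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so no group is favored
    return min(rates.values()) / highest

# Hypothetical model decisions: group A approved 8 of 10, group B 5 of 10.
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)
ratio = disparate_impact_ratio(sample)
needs_review = ratio < 0.8  # assumed review threshold
```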

Examples of Algorithmic Bias in Financial Reporting and Decision-Making

Algorithmic bias in financial applications can have real-world consequences. For example, an AI-powered loan approval system trained on biased data might unfairly reject loan applications from individuals belonging to specific demographic groups. This can lead to discriminatory lending practices and perpetuate existing inequalities. Similarly, an AI system used for fraud detection might disproportionately flag transactions from certain regions or demographics, leading to unnecessary investigations and potential reputational damage for innocent individuals. In algorithmic trading, biased models can lead to skewed market valuations and potentially destabilize financial markets. The lack of transparency in some of these systems further hinders the ability to identify and rectify such biases.

Data Security and Privacy Concerns

The integration of AI into financial systems, while offering significant advantages, introduces a new layer of complexity regarding data security and privacy. The sheer volume and sensitivity of financial data processed by AI algorithms create heightened vulnerabilities, demanding robust security measures to prevent breaches and maintain compliance with stringent regulations. Failure to adequately address these concerns can result in substantial financial losses, reputational damage, and legal repercussions.

The heightened security risks stem from several factors. Firstly, the reliance on vast datasets for training and operating AI models expands the attack surface. A breach affecting these datasets could expose sensitive customer information, including personally identifiable information (PII), transaction details, and financial account numbers. Secondly, the sophisticated nature of AI algorithms themselves can be exploited by malicious actors. Advanced attacks might target vulnerabilities in the AI models or the systems managing them, potentially leading to data manipulation, unauthorized access, or even complete system compromise. Finally, the decentralized and interconnected nature of modern financial systems, often involving cloud-based infrastructure and third-party providers, introduces further security challenges in monitoring and controlling data access across the entire ecosystem.

Compliance with Data Privacy Regulations

Ensuring compliance with regulations like the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) presents significant challenges in the context of AI-driven financial systems. These regulations mandate specific data handling practices, including data minimization, purpose limitation, and the right to access, rectification, and erasure of personal data. The use of AI algorithms, particularly those employing machine learning, often involves complex data processing techniques that make it difficult to track and control data usage precisely. Furthermore, the limited explainability of many AI models, often referred to as the “black box” problem, can hinder efforts to demonstrate compliance and address individual data subject requests. Financial institutions must implement comprehensive data governance frameworks, including robust data mapping and lineage tracking, to meet these requirements. Regular audits and independent assessments are also crucial to ensure ongoing compliance.

Strategies for Protecting Sensitive Financial Data

Protecting sensitive financial data requires a multi-layered approach combining technological and procedural safeguards. Data encryption, both in transit and at rest, is paramount to prevent unauthorized access. Access control mechanisms, including role-based access control (RBAC) and multi-factor authentication (MFA), should be implemented to restrict access to sensitive data based on user roles and responsibilities. Regular security assessments and penetration testing can help identify and address vulnerabilities in the system. Moreover, implementing robust data loss prevention (DLP) mechanisms can prevent sensitive data from leaving the controlled environment. The use of anonymization and pseudonymization techniques can further reduce the risk of data breaches by removing or replacing personally identifiable information. Finally, a well-defined incident response plan is essential to effectively manage and mitigate the impact of any security breaches.
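As an illustration of pseudonymization, the following sketch replaces an account number with a keyed hash (HMAC-SHA256) so that records can still be joined on the token without exposing the original value. The function name, record fields, and key are hypothetical. In practice the key would live in a secrets manager, and whether keyed hashing is sufficient under a given regulation is a question for legal and security review.

```python
import hashlib
import hmac

def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Return a stable token for `identifier` that cannot be reversed
    or recomputed without `secret_key`."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

def pseudonymize_record(record: dict, secret_key: bytes, fields=("account_number",)) -> dict:
    """Copy `record`, replacing the listed identifying fields with tokens."""
    cleaned = dict(record)
    for field in fields:
        if field in cleaned:
            cleaned[field] = pseudonymize(str(cleaned[field]), secret_key)
    return cleaned

# Hypothetical key and record, for demonstration only.
key = b"demo-key-not-for-production"
record = {"account_number": "GB00-1234-5678", "amount": "250.00"}
safe_record = pseudonymize_record(record, key)
```

Because the same input and key always produce the same token, analysts can still group transactions by account. A plain unkeyed hash would be weaker here, since account numbers come from a small enough space to be guessed and re-hashed.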

Securing AI Models and Preventing Malicious Attacks

Securing AI models and preventing malicious attacks requires a proactive approach focusing on both model security and system security. Model security involves protecting the AI model itself from tampering or malicious manipulation. This can be achieved through techniques like model watermarking, which embeds unique identifiers into the model to detect unauthorized copying or modification. Regular model monitoring and retraining can also help detect and mitigate the impact of adversarial attacks, where malicious inputs are designed to manipulate the model’s output. System security involves protecting the infrastructure and systems that support the AI model, including the data storage, processing, and communication components. This requires robust cybersecurity measures, including intrusion detection and prevention systems, firewalls, and regular software updates to address known vulnerabilities. Furthermore, implementing a strong security culture within the organization is crucial to ensure that all employees are aware of and adhere to security best practices.
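Model watermarking itself depends on the model type and is beyond a short example. A simpler, complementary control is to record a cryptographic digest of the serialized model when it is approved and to verify that digest before the model is loaded. The sketch below shows that idea. It is not watermarking, and the function names and the byte string standing in for a model file are assumptions.

```python
import hashlib
import hmac

def fingerprint(model_bytes: bytes) -> str:
    """SHA-256 digest of a serialized model artifact."""
    return hashlib.sha256(model_bytes).hexdigest()

def is_unmodified(model_bytes: bytes, approved_digest: str) -> bool:
    """True if the artifact matches the digest recorded at approval time."""
    return hmac.compare_digest(fingerprint(model_bytes), approved_digest)

# Stand-in for the bytes of a serialized model file.
approved_model = b"serialized-model-v1"
approved_digest = fingerprint(approved_model)

# Before deployment, refuse to load anything that does not match.
safe_to_load = is_unmodified(approved_model, approved_digest)
```

This only detects changes to the stored artifact. It does not detect adversarial inputs at inference time, which is where the monitoring and retraining described above come in.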

Human Oversight and Accountability

The increasing reliance on AI in financial data management necessitates a robust framework for human oversight and accountability. While AI offers significant advantages in speed and processing power, the inherent risks associated with algorithmic bias, errors, and security breaches demand continuous human intervention and validation to ensure data integrity and regulatory compliance. The complexity of AI systems makes assigning responsibility for errors challenging, highlighting the need for clear guidelines and auditing mechanisms.

The importance of human oversight stems from the limitations of AI itself. AI systems, however sophisticated, are trained on data and apply the patterns learned from it. They lack the nuanced judgment, contextual understanding, and ethical reasoning that humans bring to the table. Consequently, human oversight is crucial for identifying and correcting biases, validating outputs, and ensuring the ethical and responsible use of AI in financial decision-making. Without it, the risk of significant financial errors and reputational damage increases substantially.

Challenges in Assigning Accountability for AI-Driven Errors

Attributing responsibility for errors or biases introduced by AI systems presents a significant challenge. The opaque nature of some AI algorithms, often referred to as “black box” models, makes it difficult to trace the source of an error. Is the error due to flawed data used for training, a defect in the algorithm itself, or a misinterpretation of the AI’s output by a human user? Establishing clear lines of accountability requires a multi-faceted approach, including well-defined roles and responsibilities for data scientists, AI developers, and financial professionals involved in the process. Furthermore, robust documentation of the AI system’s development, training, and deployment is essential for post-hoc analysis and investigation of any errors that arise. Legal frameworks are still evolving to address the unique challenges of assigning accountability in AI-driven systems, emphasizing the need for proactive measures to mitigate risk.

Robust Auditing Mechanisms for AI-Driven Financial Data Processes

Implementing robust auditing mechanisms is paramount for maintaining trust and transparency in AI-driven financial data management. These mechanisms should encompass the entire data lifecycle, from data collection and preprocessing to AI model training, deployment, and monitoring. Audits should verify the accuracy and completeness of data, assess the fairness and lack of bias in AI algorithms, and evaluate the effectiveness of controls designed to mitigate risks. Regular audits, performed by both internal and external experts, are essential for ensuring compliance with relevant regulations and industry best practices. The audit trails should be meticulously documented and easily accessible for review, allowing for thorough investigation of any anomalies or discrepancies detected. Moreover, the audit process itself should be subject to regular review and improvement to adapt to the evolving landscape of AI technologies.
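One way to make an audit trail tamper-evident is to chain entries together with hashes, so that altering any earlier entry invalidates everything after it. The following is a minimal sketch of that idea. The entry layout, the `GENESIS` constant, and the event fields are illustrative assumptions, and a real system would also need secure storage and access controls around the log.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _entry_hash(prev_hash: str, event: dict) -> str:
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()

def append_entry(log: list, event: dict) -> None:
    """Append `event`, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({
        "event": event,
        "prev_hash": prev_hash,
        "hash": _entry_hash(prev_hash, event),
    })

def verify_chain(log: list) -> bool:
    """Recompute every hash and confirm each entry links to the one before."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True

# Hypothetical events from a model-driven posting process.
audit_log = []
append_entry(audit_log, {"action": "model_scored", "txn": "T-1", "amount": "120.00"})
append_entry(audit_log, {"action": "human_approved", "txn": "T-1", "reviewer": "analyst_1"})
```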

Human Review and Validation of AI-Generated Financial Reports

The human review and validation of AI-generated financial reports can be laid out as a sequence of steps and decision points:

- AI Generates Financial Report.

- Human Review for Accuracy and Completeness: compare the AI-generated report to source data and identify any discrepancies.

- Human Review for Bias Detection: check for systematic overestimation or underestimation of particular items.

- Human Review for Compliance: confirm adherence to relevant accounting standards and regulations.

- Validation and Approval.

- Report Finalized and Released.

Integration of Legacy Systems

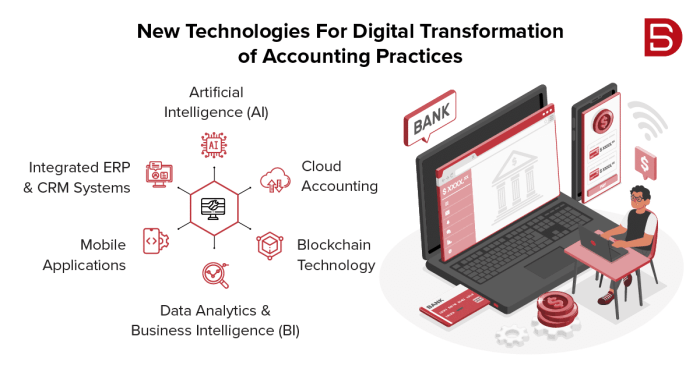

Integrating AI-powered systems with existing legacy financial data systems presents a significant challenge for organizations aiming to leverage the benefits of artificial intelligence in financial management. The complexities arise from differences in data structures, formats, and technologies used across these disparate systems. This often results in difficulties in data extraction, transformation, and loading (ETL) processes, hindering the seamless flow of information necessary for effective AI model training and deployment.

The process of migrating data between legacy and AI-driven platforms requires careful planning and execution to maintain data integrity. Inaccurate or incomplete data transfer can lead to flawed AI models, incorrect financial analysis, and ultimately, poor decision-making. Data cleansing, validation, and transformation are crucial steps to ensure data consistency and accuracy. The inherent differences in data governance, security protocols, and access controls between legacy and modern systems add further complexity to the integration process.

Data Migration Challenges and Mitigation Strategies

Successfully integrating legacy systems with AI platforms requires addressing several key challenges. A proactive approach, incorporating robust mitigation strategies, is essential for a smooth transition and the maintenance of data integrity.

- Challenge: Data Format Inconsistency: Legacy systems often use outdated data formats incompatible with modern AI systems. This necessitates data conversion and transformation, potentially introducing errors if not handled carefully.

- Mitigation: Implement a robust data transformation pipeline using ETL tools capable of handling various data formats. Employ automated data quality checks and validation rules to ensure data accuracy during conversion.

- Challenge: Data Silos and Lack of Interoperability: Legacy systems frequently operate as isolated silos, making data integration difficult. Lack of standardized APIs or data exchange protocols further complicates the process.

- Mitigation: Develop or adopt standardized APIs and data exchange protocols to enable seamless communication between systems. Implement a data integration platform to centralize data access and manage data flow across different systems.

- Challenge: Data Governance and Security Discrepancies: Legacy systems may have different data governance policies, security protocols, and access controls than AI systems. Ensuring compliance and maintaining data security during integration is crucial.

- Mitigation: Establish a comprehensive data governance framework that aligns with both legacy and AI system requirements. Implement robust security measures, including encryption and access control mechanisms, to protect sensitive financial data during migration and integration.

- Challenge: Data Cleansing and Validation: Legacy systems often contain inaccurate, incomplete, or inconsistent data. Cleaning and validating this data before integration is essential to ensure the reliability of AI models.

- Mitigation: Develop a comprehensive data cleansing and validation strategy, including automated data quality checks, outlier detection, and data imputation techniques. Utilize data profiling tools to identify and address data quality issues before integration.
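The validation steps in the mitigations above can be partly automated with a reconciliation check after each migration batch. The sketch below compares a legacy extract with the migrated records by key, by amount, and by control total. The field names `id` and `amount` and the sample rows are assumptions. Amounts are parsed as `Decimal` to avoid floating-point rounding in monetary comparisons.

```python
from decimal import Decimal

def reconcile(source_rows, target_rows, key="id", amount="amount"):
    """Compare migrated records against the legacy source.

    Assumes `key` is unique within each dataset; duplicate keys would be
    collapsed here and should be checked separately.
    """
    src = {r[key]: Decimal(str(r[amount])) for r in source_rows}
    tgt = {r[key]: Decimal(str(r[amount])) for r in target_rows}
    shared = src.keys() & tgt.keys()
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "amount_mismatches": sorted(k for k in shared if src[k] != tgt[k]),
        "control_total_diff": sum(tgt.values(), Decimal("0")) - sum(src.values(), Decimal("0")),
    }

# Hypothetical legacy extract and migrated copy with two defects.
legacy = [
    {"id": 1, "amount": "10.00"},
    {"id": 2, "amount": "20.50"},
    {"id": 3, "amount": "5.25"},
]
migrated = [
    {"id": 1, "amount": "10.00"},
    {"id": 2, "amount": "20.05"},  # transposed digits
]
report = reconcile(legacy, migrated)
```

A clean migration would produce three empty lists and a control total difference of zero. Anything else should block the batch until it is explained.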

Explainability and Transparency of AI Models

The increasing reliance on AI in financial data management necessitates a thorough understanding of how these models reach their conclusions. Opacity in AI algorithms can lead to mistrust, hinder effective risk management, and ultimately impede regulatory compliance. Transparency and explainability are crucial for building confidence in AI-driven financial decisions and ensuring accountability.

The challenge lies in the inherent complexity of many AI algorithms, particularly deep learning models, which often operate as “black boxes.” While these models can achieve high accuracy, understanding the specific reasoning behind their predictions can be incredibly difficult. This lack of transparency poses significant risks, especially in high-stakes financial applications where decisions can have substantial consequences. For example, an AI model used for credit scoring might deny a loan application, but without explainability, it’s difficult to determine whether the denial was justified or based on biased or flawed data.

Methods for Improving Model Interpretability

Improving the interpretability of AI models is paramount for building trust and ensuring accountability. Several techniques can enhance transparency: using inherently interpretable models such as linear regression or decision trees; applying model-agnostic explainability methods such as LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations), which provide insights into individual predictions; and designing models with built-in explainability features. Feature engineering and data preprocessing that produce more understandable input variables also contribute to interpretability, as do the choice of algorithm and the design of the model architecture.
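To show the idea behind Shapley-based explanations, the sketch below computes exact Shapley values for a single prediction of a toy scoring function. It does not use the SHAP library or its API. It enumerates every feature coalition directly, which is only feasible for a handful of features. The scoring function, feature names, and baseline values are all hypothetical, and features missing from a coalition are replaced by their baseline value.

```python
from itertools import combinations
from math import factorial

def shapley_values(predict, instance, baseline):
    """Exact Shapley attribution of predict(instance) - predict(baseline).

    `instance` and `baseline` are dicts with the same feature names.
    Cost grows exponentially with the number of features.
    """
    names = list(instance)
    n = len(names)

    def value(coalition):
        # Features in the coalition take the instance value, others the baseline.
        x = {f: (instance[f] if f in coalition else baseline[f]) for f in names}
        return predict(x)

    phi = {}
    for f in names:
        others = [g for g in names if g != f]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi

# Hypothetical linear credit score, used only to make the output checkable.
def toy_score(x):
    return 0.5 * x["income"] - 2.0 * x["late_payments"] + 10.0 * x["collateral"]

applicant = {"income": 60, "late_payments": 3, "collateral": 1}
reference = {"income": 40, "late_payments": 1, "collateral": 0}
contributions = shapley_values(toy_score, applicant, reference)
```

For a linear model like this one, each contribution reduces to the coefficient times the difference from the baseline, and the contributions sum to the gap between the applicant's score and the reference score. That additivity is what makes such explanations useful when a reviewer needs to justify an individual decision.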

Framework for Evaluating Explainability and Transparency

A robust framework for evaluating the explainability and transparency of AI models in financial data management should encompass several key aspects. First, it needs to assess the inherent interpretability of the chosen model. This involves considering the complexity of the algorithm and the ease with which its internal workings can be understood. Second, the framework should evaluate the availability and quality of explainability methods applied. Are these methods capable of providing clear and actionable insights into model predictions? Third, the framework should assess the comprehensibility of explanations generated by these methods. Are the explanations understandable to both technical and non-technical stakeholders? Finally, the framework should incorporate a human-in-the-loop evaluation to ensure that the explanations align with domain expertise and common sense. This might involve having financial experts review the model’s explanations and assess their plausibility and relevance. A scoring system, potentially incorporating weighted metrics for each of these aspects, could provide a quantitative assessment of the model’s explainability and transparency.
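The scoring system mentioned above could be as simple as a weighted average over the four aspects. The sketch below is one possible shape for it. The aspect names, the weights, and the 0 to 1 rating scale are assumptions chosen for illustration, and an institution would need to calibrate them against its own risk appetite.

```python
# Assumed weights for the four aspects described in the framework.
DEFAULT_WEIGHTS = {
    "inherent_interpretability": 0.3,
    "method_quality": 0.3,
    "comprehensibility": 0.2,
    "expert_agreement": 0.2,
}

def explainability_score(ratings, weights=DEFAULT_WEIGHTS):
    """Weighted average of aspect ratings, each expected in [0, 1]."""
    if set(ratings) != set(weights):
        raise ValueError("ratings must cover exactly the weighted aspects")
    for aspect, rating in ratings.items():
        if not 0.0 <= rating <= 1.0:
            raise ValueError(f"rating for {aspect} must be between 0 and 1")
    total_weight = sum(weights.values())
    return sum(weights[a] * ratings[a] for a in weights) / total_weight

# Hypothetical ratings from a review panel for one credit model.
panel_ratings = {
    "inherent_interpretability": 0.9,
    "method_quality": 0.6,
    "comprehensibility": 0.5,
    "expert_agreement": 0.7,
}
score = explainability_score(panel_ratings)
```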

The Evolving Regulatory Landscape

The rapid advancement of AI in finance presents significant challenges for regulators worldwide. Existing frameworks, designed for traditional financial systems, often struggle to adequately address the unique risks and opportunities presented by AI-driven data management. A critical need exists for a flexible and adaptable regulatory environment that fosters innovation while safeguarding financial stability and consumer protection.

The integration of AI into financial data management necessitates a reassessment of existing regulations. Current rules, which focus primarily on data privacy and security (such as GDPR and CCPA), are insufficient to comprehensively address the complexities introduced by AI algorithms. These algorithms, often opaque in their decision-making processes, introduce new sources of risk that require a targeted regulatory response. The challenge lies in balancing the need for robust oversight against the risk of stifling technological progress.

Emerging Regulatory Challenges Related to AI in Finance

The application of AI in finance introduces several novel regulatory challenges. For instance, algorithmic bias can lead to discriminatory outcomes, necessitating regulations to ensure fairness and transparency. The explainability of AI models is another crucial concern; regulators are increasingly demanding that financial institutions be able to explain how AI systems arrive at their decisions, particularly when those decisions have significant consequences for consumers or the financial system. Further, the potential for AI-driven fraud and market manipulation requires a proactive regulatory approach to mitigate these emerging threats. Data security and privacy, while already regulated, take on new dimensions in the context of AI, necessitating updated guidelines to protect sensitive financial information processed by complex algorithms.

Comparison of Existing Regulations and AI Needs

Existing regulations, such as the Dodd-Frank Act in the US and the Markets in Financial Instruments Directive II (MiFID II) in Europe, focus primarily on traditional financial risks. These regulations were not designed to address the full range of risks associated with AI, such as algorithmic bias, lack of transparency, and the potential for manipulation. While some regulations, such as GDPR, address data privacy concerns, they do not fully capture the specific challenges of AI-driven data management, including the use of AI for profiling and automated decision-making. A significant gap therefore exists between the current regulatory landscape and the needs of AI-driven financial data management. A key difference lies in the emphasis on human oversight and accountability. Existing regulations often assume human involvement in critical decisions; the increasing autonomy of AI systems requires a re-evaluation of that assumption.

Potential Future Regulatory Frameworks and Implications

Future regulatory frameworks for AI in finance are likely to focus on enhancing transparency, accountability, and fairness. This might involve requiring explainable AI (XAI) techniques, mandating audits of AI systems, and establishing clear lines of responsibility for algorithmic decisions. The potential implications include increased compliance costs for financial institutions, but also greater trust and confidence in AI-driven financial services. A phased approach, allowing for adaptation and learning, might be more effective than a drastic overhaul of the existing regulatory system. For example, regulators could focus initially on high-risk applications of AI, such as credit scoring and fraud detection, before expanding their scope to lower-risk areas.

Examples of Regulatory Bodies and Current Guidelines

Several regulatory bodies are actively developing guidelines for AI in finance. The Financial Stability Board (FSB) has published reports on the implications of AI for financial stability, while national regulators such as the Financial Conduct Authority (FCA) in the UK and the Office of the Comptroller of the Currency (OCC) in the US are developing specific guidance for financial institutions using AI. These guidelines often focus on issues such as model risk management, data governance, and consumer protection. For instance, the FCA has emphasized the importance of fairness, transparency, and accountability in the use of AI in financial services, while the OCC has focused on ensuring the safety and soundness of AI-driven banking operations. These initiatives demonstrate a growing recognition of the need for tailored regulatory frameworks to address the unique challenges of AI in finance.

Conclusion

Ultimately, successfully navigating the challenges of managing financial data integrity in the age of AI requires a multi-pronged approach. This includes fostering a culture of transparency and accountability, investing in robust security measures, proactively addressing algorithmic bias, and adapting to the evolving regulatory landscape. By prioritizing human oversight, enhancing the explainability of AI models, and ensuring seamless integration with legacy systems, we can harness the power of AI while safeguarding the integrity of financial data and maintaining public trust.

Question & Answer Hub

What are the biggest risks associated with using AI in financial data management?

The biggest risks include algorithmic bias leading to inaccurate decisions, data breaches compromising sensitive information, and lack of transparency in AI models hindering accountability.

How can human oversight mitigate the risks of AI in finance?

Human oversight ensures accountability, allows for detection and correction of errors or biases, and provides a crucial check on AI-driven decisions, preventing potentially catastrophic consequences.

What are some strategies for ensuring data privacy when using AI in financial systems?

Strategies include robust encryption, anonymization techniques, compliance with data privacy regulations (like GDPR), and implementing strict access control measures.

How can we improve the explainability and transparency of AI models in finance?

Employing techniques like SHAP values, LIME, and developing simpler, more interpretable models can improve transparency. Clear documentation of model development and decision-making processes is also crucial.
